What’s in a camera? A lens, a shutter, a light-sensitive surface and, increasingly, a set of highly sophisticated algorithms. While the physical components are still improving bit by bit, Google, Samsung and Apple are increasingly investing in (and showcasing) improvements wrought entirely from code. Computational photography is the only real battleground now.

The reason for this shift is pretty simple: Cameras can’t get too much better than they are right now, or at least not without some rather extreme shifts in how they work. Here’s how smartphone makers hit the wall on photography, and how they were forced to jump over it.

Not enough buckets

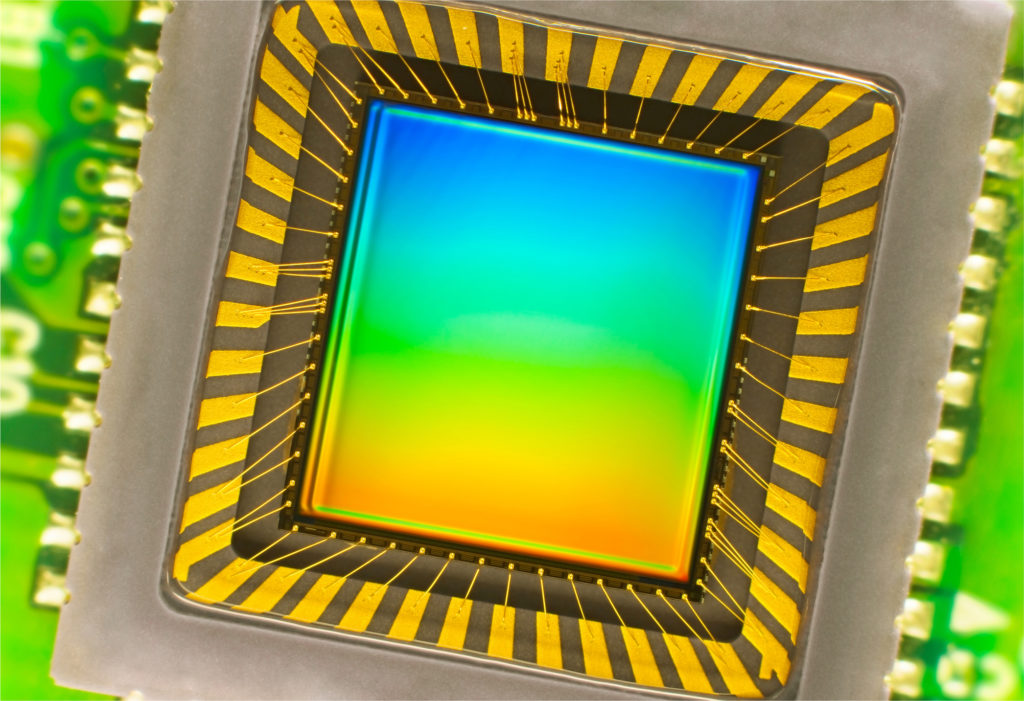

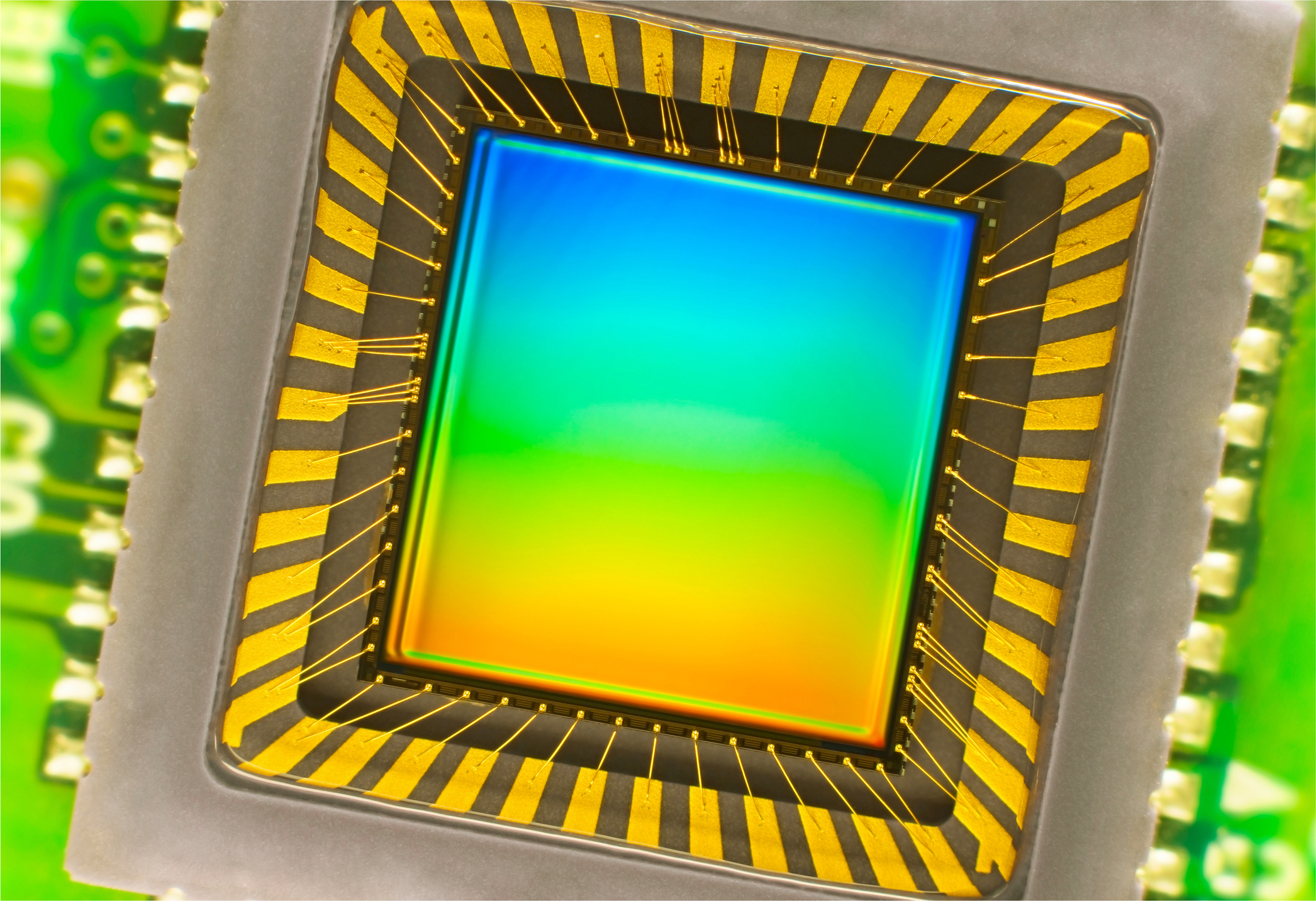

An image sensor one might find in a digital camera

The sensors in our smartphone cameras are truly amazing things. The work that’s been done by the likes of Sony, OmniVision, Samsung and others to design and fabricate tiny yet sensitive and versatile chips is really pretty mind-blowing. For a photographer who’s watched the evolution of digital photography from the early days, the level of quality these microscopic sensors deliver is nothing short of astonishing.

But there’s no Moore’s Law for those sensors. Or rather, just as Moore’s Law is now running into quantum limits at sub-10-nanometer levels, camera sensors …

Source: TechCrunch Gadgets
