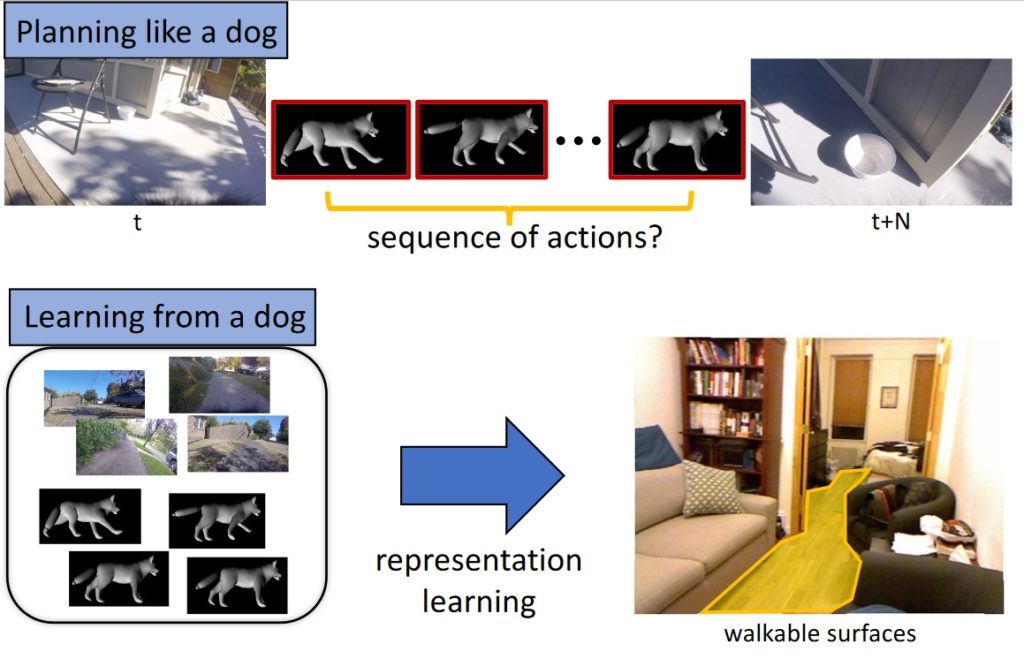

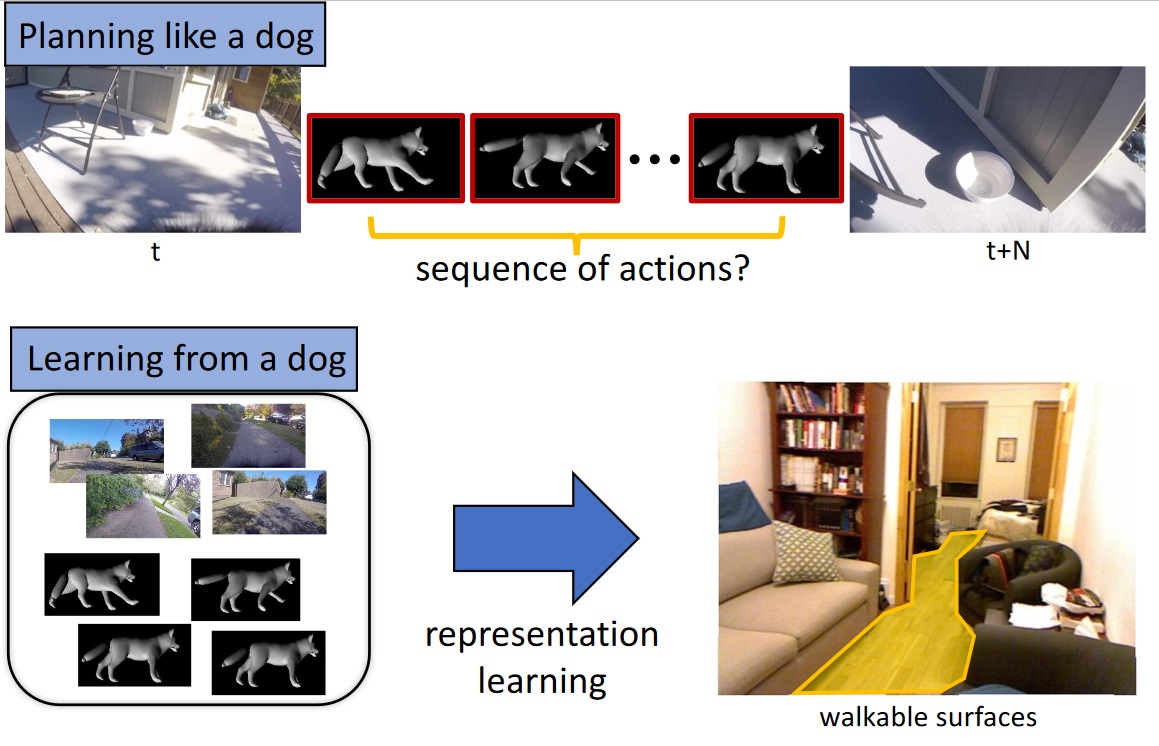

We’ve trained machine learning systems to identify objects, navigate streets, and recognize facial expressions, but difficult as those tasks may be, they don’t come close to the level of sophistication required to simulate, for example, a dog. Well, this project aims to do just that (in a very limited way, of course). By observing the behavior of A Very Good Girl, this AI learned the rudiments of how to act like a dog.

It’s a collaboration between the University of Washington and the Allen Institute for AI, and the resulting paper will be presented at CVPR in June.

Why do this? Well, although much work has been done to simulate the sub-tasks of perception, like identifying an object and picking it up, little has been done in terms of “understanding visual data to the extent that an agent can take actions and perform tasks in the visual world.” In other words, act not as the eye, but as the thing controlling the eye.

And why dogs? Because they’re intelligent agents of sufficient complexity, “yet their goals and motivations are often unknown a priori.” In other words, dogs are clearly smart, but we have no idea what …read more

Source: TechCrunch Gadgets
